AI in Criminal Justice & Facial Recognition

[Note: This article is part 3 of a series on AI Ethics and Regulation. The previous installment, on credit scoring, can be found here.]

AI-Driven Risk Assessment in Criminal Justice

The use of AI for risk assessment in the criminal justice system has provoked widespread controversy. Endless amounts of digital ink have been spilled writing about COMPAS, for example, ever since ProPublica first subjected the algorithm to public scrutiny in 2016, in a groundbreaking piece of investigative journalism that proved to be a catalyst for seminal research in algorithmic fairness. It is hard to think of many other algorithms whose analysis has reached such Talmudic levels of scholarship and scrutiny, a fact that is made even more remarkable considering that COMPAS is proprietary, and which throws into doubt commonly made claims about the difficulty of obtaining information about algorithmically made decisions (as opposed to decisions made by humans). The main charge leveled against COMPAS by ProPublica’s initial analysis was that the COMPAS algorithm doesn’t satisfy a particular group-oriented fairness criterion known as equalized odds (also known as error rate parity), and consequently its results are unfairly biased against Black defendants.1 However, it turned out that the algorithm does satisfy other definitions of fairness, such as predictive parity and calibration. Moreover, it was subsequently proven mathematically (I will present such proofs in detail in the sixth installment of this series) that these different group-based notions of fairness are fundamentally incompatible, in that as long as the base rates of the target random variable are unequal across different groups, as is almost always the case in practice, then it is demonstrably impossible for any decision-making system, algorithmic or human, to satisfy both equalized odds and either predictive parity or calibration. In other words, had COMPAS satisfied equalized odds, it would have necessarily violated both predictive parity and calibration. 
Finally, none of these metrics is able to properly capture our pretheoretic conception of fairness, and there is no consensus on which metric is objectively better or even under what circumstances one should be preferred over another.
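
To give a concrete feel for the incompatibility, here is a minimal Python sketch. It is not the proof promised for the sixth installment, only an illustration. It relies on an identity that follows directly from the confusion-matrix definitions: for a group with base rate p, FPR = p/(1 - p) × (1 - PPV)/PPV × (1 - FNR). The function name and the numbers for the two groups are hypothetical, chosen purely for illustration.

```python
def implied_fpr(base_rate: float, ppv: float, fnr: float) -> float:
    """False positive rate forced by a group's base rate, PPV, and FNR.

    Derived from the confusion-matrix definitions:
    FPR = p / (1 - p) * (1 - PPV) / PPV * (1 - FNR)
    """
    return base_rate / (1 - base_rate) * (1 - ppv) / ppv * (1 - fnr)


# Two hypothetical groups with the same PPV (predictive parity) and the
# same FNR, differing only in their base rates. Numbers are illustrative.
ppv, fnr = 0.6, 0.35
fpr_a = implied_fpr(base_rate=0.5, ppv=ppv, fnr=fnr)
fpr_b = implied_fpr(base_rate=0.4, ppv=ppv, fnr=fnr)

print(f"group A implied FPR: {fpr_a:.3f}")
print(f"group B implied FPR: {fpr_b:.3f}")
```

With unequal base rates, equal PPV and equal FNR force the two false positive rates apart, so equalized odds (which requires equal FPR and equal FNR) cannot hold at the same time.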

Importantly, we will also see that there is often an inherent tension between fairness and accuracy, whereby, generally speaking, the only way to make an algorithm more fair is to make it less accurate. This can have significant real-world consequences when inaccurate predictions translate to releasing defendants who go on to commit crimes or granting loans to applicants who end up defaulting. By examining the same COMPAS data from Florida’s Broward County that ProPublica analyzed, researchers showed that satisfying even a weaker version of equalized odds (the fairness property that ProPublica chose to focus on), namely false positive parity, would “substantially decrease public safety.” Likewise for other fairness criteria, such as demographic parity and even conditional demographic parity; the only way to attain them would be to compromise public safety.

Intuitively, this is because conditions such as false positive parity are over-constraining, and thus the set of models that satisfy such conditions is a small sliver of the overall space of models. We will get a concrete flavor of this phenomenon later on when we formalize these fairness notions in the setting of SMT (satisfiability-modulo-theories) and carry out automated model-finding experiments in a controlled setting. Conversely, the researchers found that maximizing public safety would violate these particular fairness metrics. There is some debate in the literature about the degree of the tension between fairness and accuracy, which can vary from one domain to another, but there is no denying the tension’s existence. So there are difficult policy tradeoffs to be made, though at least in the case of algorithms we have fixed Pareto frontiers that are amenable to mathematical exploration, the results of which can inform public policy so that precise choices can be transparently made and implemented—a luxury that is not available in the case of human decision making.

Ignoring these fundamental complexities and simply demanding that any algorithm must satisfy a fixed set of fairness properties, particularly properties such as equalized odds or demographic parity that are exceedingly constraining and have an adverse impact on accuracy, is naively, and dangerously, misguided. Yet that seems to be where things are headed. Witness, for instance, Idaho’s H.B. 118, a bill introduced in 2019, which demanded that no “pretrial risk assessment algorithms” shall be used in the state of Idaho unless “first shown to be free of bias against any class of individuals protected from discrimination by state or federal law,” where “free of bias” was defined so as to require error rate parity (“the rate of error is balanced as between protected classes and those not in protected classes”). The bill was warmly greeted by the American Bail Coalition, an industry group and lobbyist for the bail bond insurance business that is dedicated to “preserving for-profit bail” (see the remarks below about the bail bond industry in the U.S.), but also by the ACLU, a pair of strange bedfellows if there ever was one. The ACLU’s reaction to the bill included the false claim that “these tools have been well documented to have been programmed with racial bias” along with proposals to go much further, by subjecting the tools to yearly audits. The bill was also approvingly cited by the “Blueprint for an AI Bill of Rights” that the White House issued in 2022, which specifically singled out the bill’s demand that all algorithms must first be shown to be “free of bias” before they can be used. Thankfully, the error rate parity requirement was eventually abandoned: the “House Judiciary Committee struck that language after becoming ‘bogged down’ in discussion of the technical requirements for validating bias or lack of bias.” Nevertheless, the mere fact that it was ever introduced and warmly received should serve as a cautionary tale. 
However well-intentioned they may be, regulatory attempts like the original version of the Idaho bill bring to mind Indiana’s infamous pi bill, which attempted to legislate the value of the constant π. Regrettably, what we would like and what reality will allow do not always coincide.

Complicating matters further, and this is a critical point that has been overlooked in the computer science literature on fairness, as I already pointed out in the first article of this series, the sort of interventions that are necessary to make an algorithm more fair, such as using different prediction thresholds for different protected groups (or other similar “algorithmic affirmative action” measures), are liable to attract strict legal scrutiny and might well end up being proscribed on constitutional (equal protection) grounds, if not on statutory grounds as well (e.g., Title VII), since they subject individuals to facially disparate treatment. Similar concerns might even arise in the EU, though European law generally gives wider latitude to substantive conceptions of non-discrimination, whereas U.S. jurisprudence has consistently moved towards more formal (“anti-classificationist”) readings of non-discrimination. (I will discuss these issues in detail in a forthcoming article in this series, and will later draw connections between them and corresponding technical distinctions, such as that between individual- vs group-oriented fairness metrics.) Finally, even brushing aside concerns about their legality, algorithmic fairness interventions at any stage (pre-, in-, or post-processing) raise uneasy ethical questions that need to be addressed—another salient point that is often overlooked.

COMPAS has been criticized on other grounds as well; for instance, its accuracy is only about 65%. That certainly doesn’t sound like stellar performance, but let’s again remind ourselves to ask the key question: compared to what? As Bagaric et al. point out, subjective risk assessments by bail magistrates (or by members of parole boards and other legal professionals) “are notoriously inaccurate”; in fact, “they are barely more accurate than tossing a coin.” Research shows that

the sentencing decisions of experienced legal professionals are influenced by irrelevant sentencing demands even if they are blatantly determined at random. Participating legal experts anchored their sentencing decisions on a given sentencing demand and assimilated toward it even if this demand came from an irrelevant source (Study 1), they were informed that this demand was randomly determined (Study 2), or they randomly determined this demand themselves by throwing dice (Study 3).

As stressed earlier, these results are not cherry-picked outliers. The amount of empirical evidence in the literature militating against the ability of people to make sound judgments under uncertainty is overwhelming and cuts across all spheres of human activity, from the workplace to health care to law and beyond.2

It is widely acknowledged that the criminal justice system in the U.S. is deeply flawed, plagued by massive incarceration rates—the highest in the world—and severe unfairness towards poor people, who are often members of racial and ethnic minorities. Pretrial detention is a particularly acute problem. In theory, after someone is arrested (perhaps wrongfully), they should be granted the constitutional presumption of innocence and be able to return home until given the opportunity to defend themselves in court, unless there is compelling evidence to suggest that releasing them will compromise public safety. In practice, however, defendants are routinely required to pay bail in order to get out of jail. Most poor people simply do not have the wherewithal to do that and are therefore kept languishing in jail until their trial, a period of time that can stretch to months or even years. At any given time there are anywhere from 400K to 500K people in local jails because they cannot afford bail, the large majority of them charged with low-level offenses. (To avoid this fate, which often entails job and housing loss and can upend a person’s life, impacting everything from their personal relationships and families to their health and education, many poor people turn to for-profit bail bond lenders, who lend them the bail cash but charge about 10 to 15 percent of the amount as a non-refundable fee and secure the loan with collateral on the defendant’s property; insuring these bail bond lenders is a $15-billion industry in the U.S.) Algorithmic risk assessment tools can play an important role in mitigating this problem, as demonstrated by the adoption of the PSA (Public Safety Assessment) algorithm by the state of New Jersey in 2017. PSA is intended to help judges in making pretrial release decisions by computing a numeric score indicating the risk that the defendant might commit a crime before their trial or might fail to appear in court. 
The algorithm makes a recommendation but ultimately it is up to humans to make the final decision, with the prosecutors and lawyers often duking it out in front of the judge.

A 2019 report on the results of adopting PSA in New Jersey found that “fewer arrest events took place following” the adoption of PSA, specifically with “a reduction in the number of arrest events for the least serious types of charges — namely, nonindictable (misdemeanor) public-order offenses,” while “pretrial release conditions imposed on defendants changed dramatically as a result,” with “a larger proportion of defendants released without conditions”. The reform “significantly reduced the length of time defendants spend in jail in the month following arrest” and “had the largest effects on jail bookings in counties that had the highest rates of jail bookings before [the reform]”. An evaluation by the state’s Administrative Office of the Courts (AOC) found that “the PSA has been remarkably accurate in classifying a defendant’s risk,” adding that “as risk scores increase, actual failure rates of compliance increase in step.” And while Black defendants remained overrepresented in the pretrial jail population, the report also highlighted that, by using PSA, New Jersey incarcerated “3,000 fewer black, 1,500 fewer white, and 1,300 fewer Hispanic individuals”. These numbers are consistent with early reports of PSA’s impact: “Six months into this venture, New Jersey jails are already starting to empty, and the number of people locked up while awaiting trial has dropped,” with court officials saying that:

the early numbers show the new process is already working: people who aren’t dangerous are not being jailed solely because they can’t afford bail, and dangerous people aren’t being released even though they can afford to pay. ’You can disagree with an individual decision, but you can’t deny the systemic change,’ said Glenn Grant, who manages the reforms as acting administrative director of the New Jersey court system.

Moreover, “serious crime offenses, which include murder, rape, aggravated assault and burglary, fell to 164,965 in 2020 from 212,346 in 2017, according to the New Jersey government records”. It is also consistent with mathematical analyses of existing datasets and with policy simulations, which have shown that algorithmic risk assessments in the criminal justice system can reduce crime “by up to 24.8% with no change in jailing rates, or jail populations can be reduced by 42.0% with no increase in crime rates,” causing reductions “in all categories of crime, including violent ones,” while, “importantly, such gains can be had while also significantly reducing the percentage of African-Americans and Hispanics in jail.” It is therefore puzzling that progressive organizations like the ACLU and the NAACP choose to throw the baby out with the bathwater by rejecting the use of algorithmic tools such as the PSA, while acknowledging all the listed improvements, simply because these tools do not magically achieve demographic parity in jail populations, a laudable goal but one that can only be achieved by comprehensive long-term social change.

We will return to the use of AI (and algorithms in general) in the criminal justice system later, and particularly to COMPAS, which will be discussed in much greater detail, but the facts listed above highlight the promise of the technology and the irrationality of the opposition to it.

Facial Recognition

The case of facial recognition technology (FRT) is more nuanced. FRT raises some novel issues and does call for some carefully targeted regulation. And it is very likely that such regulation would have already been enacted in the U.S. if it weren’t for the fact that many FRT opponents are abolitionists who have taken a “ban or nothing” approach to the subject, and whose “inability to compromise to some regulation” has had “a real cost,” as law professor Andrew Guthrie Ferguson puts it. At any rate, the key issues regarding FRT are primarily about privacy and consent, and have little to do with the other general “AI concerns” that are bandied about and with which they are usually lumped. They are more akin to the privacy and discrimination concerns surrounding biometric data in general, including, e.g., fingerprints, palm scans, iris patterns, and DNA. These are issues that have been around for a very long time and can be addressed by privacy-focused regulation; dragging them under the AI umbrella simply because FRT systems happen to be implemented via ML models is sloppy thinking that focuses on the how instead of the what. Moreover, as in other cases, the most serious threats posed by FRT are potential abuses of the technology by state actors for population surveillance and control (a concern that is not limited to authoritarian regimes like those of China), not so much by the CCTV at your local Starbucks drive-through or the FRT in your dorm’s M&M vending machine.

One of the novel issues raised by FRT has to do with scale. As mentioned earlier, decision-making systems often have fixed boundaries that are exogenously determined, so it is misguided to make the general claim that algorithmic decision-making systems can magically introduce greater amounts of bias simply by virtue of operating at scale. But often is not always, and FRT is a case in point. Using FRT, it is possible to instantly scan and analyze the face of every person entering JFK airport on a given day (more than 150,000 people on average), or every person attending a concert, a football game, or a political protest, which would simply not be feasible manually. This represents a novel class of use cases that did not even exist before the technology emerged. Some of these, such as the use of FRT for surveillance of political protests, are probably best banned completely. Other scenarios need more debate. For one thing, precisely because of their novelty and scale, such use cases impose accuracy demands that render human performance baselines irrelevant. If 170,000 people enter JFK on a given day, a per-person accuracy of 99.9% might not be good enough for FRT, since it would translate to 170 people being wrongfully identified and possibly detained for questioning. Whether or not that is acceptable, or whether to demand a higher accuracy, must be determined on a case-by-case basis by policy makers and the relevant stakeholders, including the affected public (or at least their elected representatives), hopefully through a transparent, data-driven, and rational cost-benefit analysis. But the fact that “the average person makes between 20 and 30% errors when performing” recognition tasks involving unfamiliar faces would be neither here nor there in such cases, because we’re not talking about FRT replacing humans; we’re talking about using FRT to do something that humans have never done before (and couldn’t possibly do on their own).
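
The arithmetic behind the JFK example can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration: it reads the 99.9% figure as a 0.1% chance of wrongly flagging any given traveler, and the function name and the alternative error rates are hypothetical.

```python
def expected_false_alerts(travelers: int, false_id_rate: float) -> float:
    """Expected number of travelers wrongly flagged in one day.

    Assumes every traveler is scanned once and each scan has the same
    independent probability (false_id_rate) of a wrongful identification.
    """
    return travelers * false_id_rate


DAILY_TRAVELERS = 170_000  # figure used in the example above

# 0.1% corresponds to the 99.9% figure; the stricter rates are hypothetical.
for rate in (1e-3, 1e-4, 1e-5):
    alerts = expected_false_alerts(DAILY_TRAVELERS, rate)
    print(f"false identification rate {rate:.3%}: about {alerts:.1f} people per day")
```

At this scale, each additional "nine" of accuracy cuts the expected number of wrongly flagged travelers by a factor of ten, which is the kind of quantity a cost-benefit analysis would have to weigh.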

Nevertheless, there are other controversial uses of FRT where comparisons with existing baselines can be made more meaningfully, if still imperfectly. The most prominent one is the use of FRT in forensic investigations, where a photo of a suspect caught in a criminal act, obtained from CCTV footage (or a bystander’s phone, a doorbell camera, and so on), is compared against a database of images and results in a ranked list of possible matches exceeding a certain similarity threshold. Police officers can then pursue those matches as they see fit. Thus, this workflow has humans in the loop and does not rely on FRT as an autonomous decision making system. Of course, insofar as humans themselves have high error rates when performing facial recognition under those circumstances, having one in the loop might not be much of a guardrail. However, it is important to note that FRT matches, no matter how they are appraised by law enforcement officers, are considered investigative leads only, not “positive identifications” and most certainly not probable causes for arrest; additional evidence uncovered by further investigation is required for an arrest warrant.3 This is usually printed in bold capital letters in the reports that are returned by FRT matches; it is also mandated in most jurisdictions that use FRT (see, for instance, New York City’s policy). The same general procedure is routinely followed using DNA samples from a crime scene, if any are available, fingerprints, hair, and so on. Such evidentiary samples are matched against national databases like IAFIS or CODIS. IAFIS dates back to the early 1970s, and earlier federal versions were introduced as early as 1924. (The use of fingerprints as a law enforcement technique was first introduced in the U.S. in 1903 and was already in use by most police departments a few years after that.)
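
The forensic search workflow described above (a probe image compared against a database, producing a ranked list of candidates above a similarity threshold) can be sketched as follows. This is a toy illustration, not any vendor's actual pipeline: real systems use learned face embeddings with hundreds of dimensions and their own scoring functions, whereas the three-dimensional vectors, record names, threshold, and function names here are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def candidate_list(probe, gallery, threshold, max_candidates=5):
    """Return gallery entries scoring at or above the threshold, best first."""
    scored = [(name, cosine_similarity(probe, emb)) for name, emb in gallery.items()]
    above = [(name, score) for name, score in scored if score >= threshold]
    return sorted(above, key=lambda pair: pair[1], reverse=True)[:max_candidates]


# Hypothetical gallery of face embeddings (toy 3-dimensional vectors).
gallery = {
    "record_001": [0.90, 0.10, 0.30],
    "record_002": [0.20, 0.80, 0.50],
    "record_003": [0.85, 0.20, 0.35],
}
probe = [0.88, 0.15, 0.32]  # embedding of the probe image

leads = candidate_list(probe, gallery, threshold=0.95)
for name, score in leads:
    print(f"{name}: similarity {score:.3f} (investigative lead only)")
```

Note that the output is a list of candidates for human review, not a single identification; raising the threshold shortens the list, while lowering it returns more candidates.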

Concerns about FRT in this setting fall into three categories. The first and most pressing is the technology’s inaccuracy due to the imperfect operating conditions under which the evidentiary images, also known as “probe” images, are often obtained (poor lighting or pixelation, grainy footage with low resolution, occlusion, obscuring angles, unusual poses, etc.), as opposed to the more forgiving conditions under which FRT systems are usually evaluated in the lab; the passage of time since the images in the database were taken; and so on. Second, there are charges of demographic bias alleging that FRT performance is significantly worse for racial minorities and women, leading to higher false positive rates for those groups. And finally there are privacy concerns. We’ll start the discussion with privacy and then we’ll move to performance and bias.

The first thing to note about the privacy concerns regarding the use of FRT in criminal investigations is that they are not novel; they are similar to the privacy concerns about fingerprints and other types of biometric data that have been raised for many decades. For instance, more than half of the states in this country allow federal law enforcement agencies access to their databases of state ID photos, obtained from local DMVs, so that they can be used in FRT searches. As a result, there’s a good chance that a randomly selected American’s driver’s license photo is routinely cross-referenced with suspects’ pictures, as the FBI alone performs many thousands of FRT searches against DMV databases each year.4 These searches are similar to searches of fingerprint databases, which have been standard practice for decades. However, those databases comprise mostly—though not exclusively—fingerprints of people who have been arrested, and there are compelling procedural and public safety arguments for storing and searching those.5 By contrast, when someone has their photo taken for their driver’s license at the DMV, that person is not having an interaction with the criminal justice system. They are under no legal obligation to consent to their photos being used in criminal investigations—not that they are ever asked for consent. Indeed, most people are completely unaware that their photos are being put to such use.

On the other hand, these FRT searches involve a single static photo, not dynamic real-time images, and this fact underscores a significant distinction in surveillance intensity and privacy expectations. The static nature of state ID and DMV photos means that the conducted surveillance is not continuous or invasive like real-time CCTV or camera monitoring, which can track an individual’s movements across different times and places and can capture a variety of potentially very private behaviors without their consent.6 The discrete use of a single image, which is obtained by the state for administrative purposes in the first place, is inherently less intrusive and more controlled. Secondly, to expand on the point about the “administrative purposes” of these photos, the nature of driver’s license and state ID photos suggests that individuals might not have a reasonable expectation of privacy regarding these images in the same way they do regarding their own private photos. When an individual has their photo taken for a driver’s license, they are engaging in a process that inherently involves identity verification by the state, with the understanding that these photos serve as a means of public identification. They are intended to be shown to and recognized by others, including law enforcement, for the purpose of verifying identity. When these considerations are made in the context of today’s digital age, where personal images are so frequently shared and readily accessible, they suggest that expectations of privacy around a government-issued ID photo that is specifically taken for identification purposes are diminished. Finally, to reiterate the point that was made earlier, the use of DMV photos in FRT searches is typically carried out by federal agencies only, such as the FBI, DEA, ICE, and DHS. 
Local law enforcement agencies (e.g., the NYC police department) are often constrained by state laws and community standards and only perform FRT searches against mugshot databases. The use of DMV photos by federal agencies can be supported by their need to cast a wider net across the entire population instead of limiting their searches to previously arrested individuals, in order to identify suspects involved in crimes that cross state lines or have national security implications.

These considerations are only intended to highlight the complexity of the issues and to advocate for a nuanced approach rather than a binary all-or-nothing perspective; they do not mean that regulation of FRT searches is not needed—it is. There is a long historical record, stretching from the days of COINTELPRO to more recent developments with mass NSA surveillance and the bypassing of FISA, showing that data mining mechanisms introduced for ostensibly legitimate purposes can be subverted by federal authorities, so safeguards must be put into place to prevent abuse. Further, while explicit consent might not be required ultimately, public awareness through comprehensive disclosure about the use of FRT in criminal investigations might be needed to enforce transparency and to avoid breeding distrust in public institutions.

On the performance front, the main concern from the perspective of civil liberties has been with false positives (type I errors), captured by the FMR metric (“false match rate”), since they are the errors that can lead to the wrongful detention of innocent people. From a criminal investigator’s perspective, FNR, the false negative rate, which represents type II errors (and is the same quantity as 1 - recall), is even more important in order to ensure that suspects don’t go undetected. Accordingly, investigators usually like to get at least a few dozen matches, which can then be evaluated and investigated by trained humans.
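
To make the two error types concrete, here is a minimal Python sketch of how a false match rate and a false negative rate could be computed from comparison scores at a given threshold. The scores, thresholds, and function name are hypothetical and for illustration only; actual evaluations use vastly larger sets of comparisons.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false match rate, false negative rate) at a threshold.

    genuine_scores: similarity scores for pairs showing the same person.
    impostor_scores: similarity scores for pairs showing different people.
    A pair is declared a match when its score is >= threshold.
    """
    false_matches = sum(score >= threshold for score in impostor_scores)
    false_non_matches = sum(score < threshold for score in genuine_scores)
    fmr = false_matches / len(impostor_scores)
    fnr = false_non_matches / len(genuine_scores)
    return fmr, fnr


# Hypothetical similarity scores, made up for illustration.
genuine = [0.91, 0.88, 0.97, 0.62, 0.85]
impostor = [0.12, 0.35, 0.71, 0.28, 0.44, 0.09, 0.53, 0.31]

for threshold in (0.5, 0.7, 0.9):
    fmr, fnr = error_rates(genuine, impostor, threshold)
    print(f"threshold {threshold}: FMR = {fmr:.3f}, FNR = {fnr:.3f}, recall = {1 - fnr:.3f}")
```

Raising the threshold lowers the FMR at the cost of a higher FNR, which is why civil liberties advocates and investigators tend to pull the operating point in opposite directions.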

FRT performance remains imperfect on both counts, but it has been improving rapidly, to the point where benchmark results from even a year or two ago are already obsolete. FRT error rates have been falling by approximately a factor of 2 per year, i.e., they have been cut in half with each passing year. Even back in 2019, NIST, which is the most authoritative source of comprehensive evaluation data for commercial FRT systems, recognized that “error rates today are two orders of magnitude below what they were in 2010, a massive reduction” and that the best-performing systems in 2019 attained “close to perfect recognition.” They are approaching the performance of fingerprint identification systems, which are considered the gold standard in forensic investigation accuracy (though fingerprint technology has attracted its own share of scrutiny). There is still a performance drop when deploying FRT “in the wild,” but the top algorithms are getting much better at recognizing faces in poor-quality images and can even identify someone in photos taken 18-20 years apart.
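
As a rough sanity check on how the "halving every year" rule of thumb relates to the "two orders of magnitude" figure, the compounding can be worked out in a few lines of Python. This is a sketch under the simplifying assumption of a perfectly steady annual rate, which real benchmark results will not follow exactly; the function names are hypothetical.

```python
import math


def years_to_shrink(reduction_factor: float, annual_factor: float = 2.0) -> float:
    """Years needed for errors to shrink by reduction_factor at a steady annual rate."""
    return math.log(reduction_factor) / math.log(annual_factor)


def cumulative_reduction(years: int, annual_factor: float = 2.0) -> float:
    """Total reduction factor after the given number of years."""
    return annual_factor ** years


print(f"{years_to_shrink(100):.1f} years of halving give a 100x reduction")
print(f"{cumulative_reduction(9):.0f}x reduction after 9 years of halving (2010 to 2019)")
```

Under this idealized assumption, two orders of magnitude take a little under seven years of halving, so the two figures quoted above are at least of the same order.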

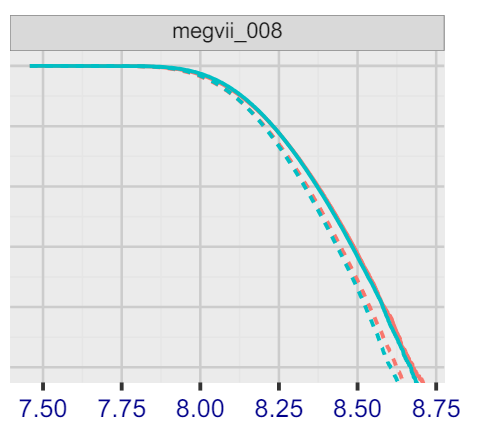

Let us now turn to the bias charges claiming that FRT works better on White subjects than it does on Black and darker-skinned individuals, and better on males than on females. These claims are largely true, but also in need of some important qualifications. To this day, it is indeed the case that most FRT algorithms have higher false positive rates for black women than they do for any other demographic group. For instance, looking at figures 255 through 282 (pp. 333–361) of the FRT evaluation report from NIST that came out on February 21 shows that, for most algorithms, the solid red curve is consistently higher than the other three curves: solid blue, dashed blue, and dashed red. Consider, for example, Fig. 255:

The colors here code race (red for black, blue for white), while solid curves correspond to women and dashed curves to men. On the x axis we have confidence thresholds indicating the minimum confidence that the system must have in order to declare a match, increasing as we move from left to right. On the y axis we have the algorithm’s FMR (False Match Rate). As one would expect from the classic precision-recall tradeoff, FMR values consistently drop as the confidence thresholds increase, and this happens across all demographic groups. For a given algorithm, when a curve corresponding to a group g₁ is higher than the curve corresponding to a group g₂, this means that the algorithm has a higher FMR (produces more false positives) for g₁ than it does for g₂, no matter the threshold (except for negligibly small thresholds, which lead to error rates so uniformly high that differences across demographic groups vanish). And again, as can be seen from the graphs, for the large majority of algorithms the solid red line (black females) is the highest curve, while the dashed blue line (white males) is the lowest. It is also interesting to note that performance is often better for black men than it is for white women.

However, for any reasonable threshold, demographic differences in error rates (the gaps between the curves) tend to be quite small. The FMR difference between white and black males for a given algorithm and operating threshold might be sizable in relative terms, but in absolute terms it might produce, say, 4 false positives for white males and 5 false positives for black males. That’s a 25% discrepancy, but in practice both sets of false positives should be ruled out by properly trained facial forensic examiners.7 Moreover, these differences have been shrinking consistently. Last year, and for the first time ever, NIST reported a performance variance of just 0.0001 across racial and gender groups.
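
The difference between the relative and the absolute view of such a gap can be made explicit with a short Python sketch. The counts of 4 and 5 false positives come from the example above; the number of comparisons per group (one million) and the function name are hypothetical, introduced only to turn the counts into rates.

```python
def compare_false_positives(fp_group_a: int, fp_group_b: int, comparisons: int) -> dict:
    """Absolute and relative gap in false match rate between two groups.

    Assumes both groups were tested on the same number of impostor comparisons.
    """
    fmr_a = fp_group_a / comparisons
    fmr_b = fp_group_b / comparisons
    return {
        "fmr_a": fmr_a,
        "fmr_b": fmr_b,
        "absolute_gap": fmr_b - fmr_a,
        "relative_gap": (fp_group_b - fp_group_a) / fp_group_a,
    }


result = compare_false_positives(4, 5, comparisons=1_000_000)
print(f"relative gap: {result['relative_gap']:.0%}")
print(f"absolute gap in FMR: {result['absolute_gap']:.7f}")
```

The same pair of counts yields a 25% relative gap but an absolute gap of one false match per million comparisons, which is why both views should be reported together.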

What is more important to point out is that NIST evaluates hundreds of FRT systems, submitted by vendors, research labs, and even a few universities across the world (anyone is free to submit an algorithm), and there is a very long performance tail. The large majority of the systems that are tested underperform badly by comparison to the leaders (which shows that FRT has not been commoditized yet). If we restrict attention to the best-performing systems that make up the top 10 or 20 participants, which tend to come from established industry leaders like Idemia, Cloudwalk, or NtechLab, the results (pp. 343, 337, and 350, respectively, for these three vendors) tell a very different story: FMR values are essentially identical across all different groups.

Indeed, some of the best new algorithms that were only developed recently, and which NIST only started to evaluate in 2023, like Paravision (p. 351) and Megvii (pp. 347–348), have either identical FMRs across all demographic groups or actually have lower FMRs for some historically disadvantaged groups (e.g., black males have lower FMRs than white males). For example:

So, for this problem anyway, regulation makes good sense because it is not only ethically imperative but also technically feasible, precisely focused, and enforceable: FRT systems deployed by law enforcement must be shown to have error rates that are invariant across demographic groups. And these are, in fact, requirements that have already been legally imposed in some states. For example, a state law passed in Virginia in 2022 with bipartisan support (Code §15.2-1723.2) requires that

Any facial recognition technology utilized shall utilize algorithms that have demonstrated (i) an accuracy score of at least 98 percent true positives within one or more datasets relevant to the application in a NIST Face Recognition Vendor Test report and (ii) minimal performance variations across demographics associated with race, skin tone, ethnicity, or gender. The Division shall require all approved vendors to annually provide independent assessments and benchmarks offered by NIST to confirm continued compliance with this section.

The law also constrains the use of FRT to 14 specific scenarios; it altogether bans real-time use of FRT; and it requires police departments to publicly disclose if and how they are using the technology. The ACLU opposed the law.

How about impact? Recall again that plain poor coding has caused many hundreds of deaths, thousands of injuries, and trillions of dollars in damages. The use of FRT so far has led to 7 documented cases of false arrests:

Robert Williams, a resident of Detroit who was wrongfully arrested in 2020 for a 2018 theft of watches, spent a night in jail, and is now suing the police department for wrongful arrest and also lobbying for a ban on FRT.

Nijeer Parks, a New Jersey man who was wrongfully arrested in 2019 and spent 10 days in jail, and is also now suing.

Michael Oliver, another Detroit man, was wrongfully arrested on a theft charge, and his case was later dropped. He sued the city after his arrest.

Randal Reid, who was mistakenly arrested in Georgia in 2022 on a warrant issued in Louisiana on suspicion of using stolen credit cards, and spent 6 days in detention. He also sued.

Alonzo Sawyer, falsely arrested in 2022 for assault. This case is more complicated because the positive identification that led to the arrest was actually made by a human, Sawyer’s former probation officer, who identified Sawyer from footage of the incident; the FRT had only provided a match as a lead. A week later, the probation officer contacted the police department to express doubt about his identification, at which point the police released Sawyer and then dropped the charges.

Harvey Eugene Murphy Jr. was falsely arrested in October of 2023 “on charges of robbing a Houston-area Sunglass Hut of thousands of dollars of merchandise in January 2022, though his attorneys say he was living in California at the time of the robbery.” He is suing.

Porcha Woodruff, wrongly arrested in 2023 in Detroit and held for 11 hours on suspicion of carjacking and robbery. She sued the city of Detroit in 2023.

All 7 arrests were made on the basis of false positive matches from FRT systems, and it appears that all of them, with the exception of Sawyer’s case, violated the requirement that FRT matches are to be used only as investigative leads and cannot serve as probable cause for arrest, thereby leading to well-deserved lawsuits. It is possible that other similar incidents have taken place but were not publicized; if so, there is no reliable way to estimate their number. These 7 cases seem to be the only ones on the public record as of early 2024.

Of course, every single mistaken arrest is an injustice that must be treated with the utmost seriousness. But if we are to assess the situation from a clear-eyed perspective then we must take into account the full spectrum of FRT’s impact, not just the select high-profile cases that have dominated public discourse. To that end, it should be pointed out that FRT has been used to exonerate falsely accused suspects and bring to justice a wide array of criminals, from child traffickers to killers (including mass shooters), identity thieves, sexual predators, and stalkers. It has also been used to identify missing persons (e.g., India has used FRT to successfully identify thousands of missing children), accident victims “lying unconscious on the side of the road,” a “woman whose dismembered body was found in trash bags in two Bronx parks,” a woman hospitalized with Alzheimer’s, and so on. These successes naturally resonate with the public and cannot be ignored. Indeed, the sort of blanket bans of FRT that are advocated by some organizations and activists, and which have already been enacted in places like Portland, San Francisco, Boston, Madison (Wisconsin), and about 16 other cities, are not reflective of the majority’s attitudes towards the issue. In the U.S., for instance, more Americans see the widespread use of FRT in policing “as a good than bad idea.”

And while direct comparisons with other identification procedures are difficult to make, some educated conjectures can broaden the context of the discussion. Between local and federal law enforcement agencies, many millions of FRT searches have been made to date,8 but let’s assume the total is only one million. These searches have led to 7 wrongful arrests that we know about, but let’s further assume that the real number is two orders of magnitude greater, 700. This would mean that 0.07% of the total number of searches led to instances of wrongful detention. In contradistinction, mistaken eyewitness identifications have “contributed to roughly 71% of the more than 360 wrongful convictions in the United States” that were overturned by DNA evidence, and have no doubt helped to put many more innocent people behind bars.
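The arithmetic behind the 0.07% figure can be spelled out in a few lines. This is only a restatement of the deliberately pessimistic assumptions made above (one million searches, and 100 times as many wrongful arrests as are documented), not a measurement; the function and variable names are illustrative.

```python
def wrongful_arrest_rate(known_cases, inflation_factor, total_searches):
    """Share of searches that led to a wrongful arrest under the stated assumptions."""
    assumed_cases = known_cases * inflation_factor
    return assumed_cases / total_searches


rate = wrongful_arrest_rate(
    known_cases=7,             # documented wrongful arrests
    inflation_factor=100,      # assume the true count is two orders of magnitude higher
    total_searches=1_000_000,  # assumed lower bound on the number of FRT searches
)
print(f"{rate:.2%}")  # prints 0.07%
```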

Other conventional identification methods, such as police showups and lineups, are much more susceptible to error. Psychologist and eyewitness identification expert Gary L. Wells performed an analysis of 6,734 real-life attempts by eyewitnesses to identify the perpetrator from a lineup. He found that of those witnesses who made an identification, “36.8% picked an innocent filler”; that is, more than a third of the identifications made were demonstrably false positives.

When an objective evaluation of FRT is carried out along these lines, by comparing its effectiveness against the alternatives, weighing its failures against its successes, and putting complaints of bias and inaccuracy into proper context, blanket calls for sweeping bans of the technology become unconvincing. Some regulation is indeed needed (for example, to ensure that the state does not use FRT for politically motivated surveillance and that law enforcement only uses state-of-the-art systems that do not suffer from demographic bias), but that regulation can be precise and narrow and need not involve any grand AI-in-general themes.

In the next write-up we will turn our attention back to the original question posed by the first article.

These fairness metrics will be formally defined and extensively discussed in a subsequent article. Their technical details are not essential for present purposes.

A 2018 paper purported to show that COMPAS is only slightly better at predicting recidivism than crowdsourced volunteers without any background in criminal justice. However, the volunteers in that study were told exactly what to focus on: seven pieces of information, such as age and number of previous convictions, which were known ahead of time to be correlated with recidivism. This is not how legal professionals go about assessing recidivism risk in practice. Professionals have access to a wide range of information, and that is indeed part of the problem, as noted in the first article of the series, because additional information tends to spread their attention thin and can also invite them to consider unnecessarily “complex combinations of features,” as Kahneman points out (p. 224), as well as inviting the plethora of cognitive and emotional biases we've already discussed.

In that respect they are similar to anonymous tips, which can be explored as deemed necessary by the judgment of the investigating officers, but do not themselves constitute evidence. (Note, however, that testimony provided by credible informants who have assisted investigations in the past does constitute sufficient grounds for probable cause, as affirmed by the Supreme Court’s 1972 ruling in Adams v. Williams. Assuming that FRT accuracy continues to improve, it is reasonable to expect that high-confidence positive identifications by FRT systems might eventually play an instrumental evidentiary role on their own.)

Already in 2016 a study by the Georgetown Law Center on Privacy & Technology had estimated that “one in two American adults is in a law enforcement face recognition network,” and participation has increased significantly since then.

Of course, many people are arrested but never charged (about 15 to 20% of all arrests are not prosecuted), and many of those who are charged are never convicted, so it is far from clear that retaining and using their fingerprints indefinitely afterwards is either reasonable or fair. IAFIS policy is to retain all fingerprints that are entered into the database until the suspect is 99 years old or for 7 years after notification of death, on the grounds that every interaction with the criminal justice system must be recorded. More importantly, if arrest rates have been historically lopsided against minorities or have been inflated due to an inordinate number of low-level drug-related offenses such as marijuana possession or income-related offenses such as vagrancy (as was indeed the case in the US), then the fingerprint database itself will be biased, as it will contain disproportionately large numbers of entries from minorities and poor people. More than 80% of all arrests in the US are for low-level offenses such as “drug abuse” or “disorderly conduct”; less than 5% are for serious violent offenses (what the FBI refers to as violent “Part I” offenses).

Location, in particular, and an individual’s movements over time, even when they are out in public spaces, have been recognized by the Supreme Court as revealing private information. The Court so held in 2018 in Carpenter v. U.S., in the context of cell phone location data; the government must therefore secure a search warrant to track this information.

Whether training law enforcement personnel in tasks like facial image comparison and identification improves their accuracy has been debated. However, recent results indicate that trained facial examiners achieve the same accuracy as naturally skilled “super-recognizers” (see below) and deep neural networks. Moreover, the two groups display distinct error patterns: trained forensic examiners were found to be “slow, unbiased and strategically avoided misidentification errors,” whereas super-recognizers were “fast, biased to respond ‘same person’ and misidentified people with extreme confidence.” The findings support regulations mandating appropriate training for those investigators “in the loop” who are tasked with analyzing FRT-generated candidate lists. At present, the Facial Identification Scientific Working Group (FISWG) has recommended guidelines that call for training, but they are not enforced and it appears that many police departments do not follow them. At the federal level, 7 different agencies “initially used [FRT] without requiring staff take facial recognition training,” but “two agencies require it as of April 2023.” Recommendations made by the U.S. Government Accountability Office (GAO) in September 2023 call for training requirements and safeguards to protect civil liberties.

Super-recognizers, by the way, are people who are exceptionally accurate at identifying unfamiliar faces (outperforming normative controls by two standard deviations) without any special training. They are a relatively recent phenomenon that seems to have been discovered over the last 10-15 years (the Google Ngram Viewer shows that the term had zero occurrences before 2008), and they are now beginning to be recruited and deployed more widely in law enforcement, particularly in the UK. Some psychologists, while recognizing the existence of super-recognizers, have recommended exercising caution regarding their deployment, given that their performance is yet to be thoroughly studied and understood.

Clearview alone (the FRT company that everyone loves to hate) claims to have been used about a million times.