Anthropic just released a new model, Claude 3.5 Sonnet, to much fanfare, claiming that it “sets new industry benchmarks for graduate-level reasoning” and that “it is now the most intelligent model in the world”. Gushing commentators are rushing to sing its praises, referring to it as “the new gold standard” that “sets the bar high”, is “smarter” than any other LLM, “exceling in tasks that demand deep reasoning”, displaying “sophisticated reasoning,” and so on and so forth.

What all that really means is this: The model has improved on the previous state-of-the-art (SOTA) performance by a few percentage points on certain multiple-choice benchmarks.¹ By now, however, everyone who actually works in the field knows full well that such benchmarks, and the various leaderboards built around them, are practically useless. The reasons range from data contamination (intentional or not), to brittleness, to the fact that a model might give the right answer for the wrong reasons (which is impossible to tell without explanations), to a host of other issues. I’ve discussed these issues before, and many others have as well. Yet tech companies continue to orchestrate gargantuan PR stunts based on these benchmarks.

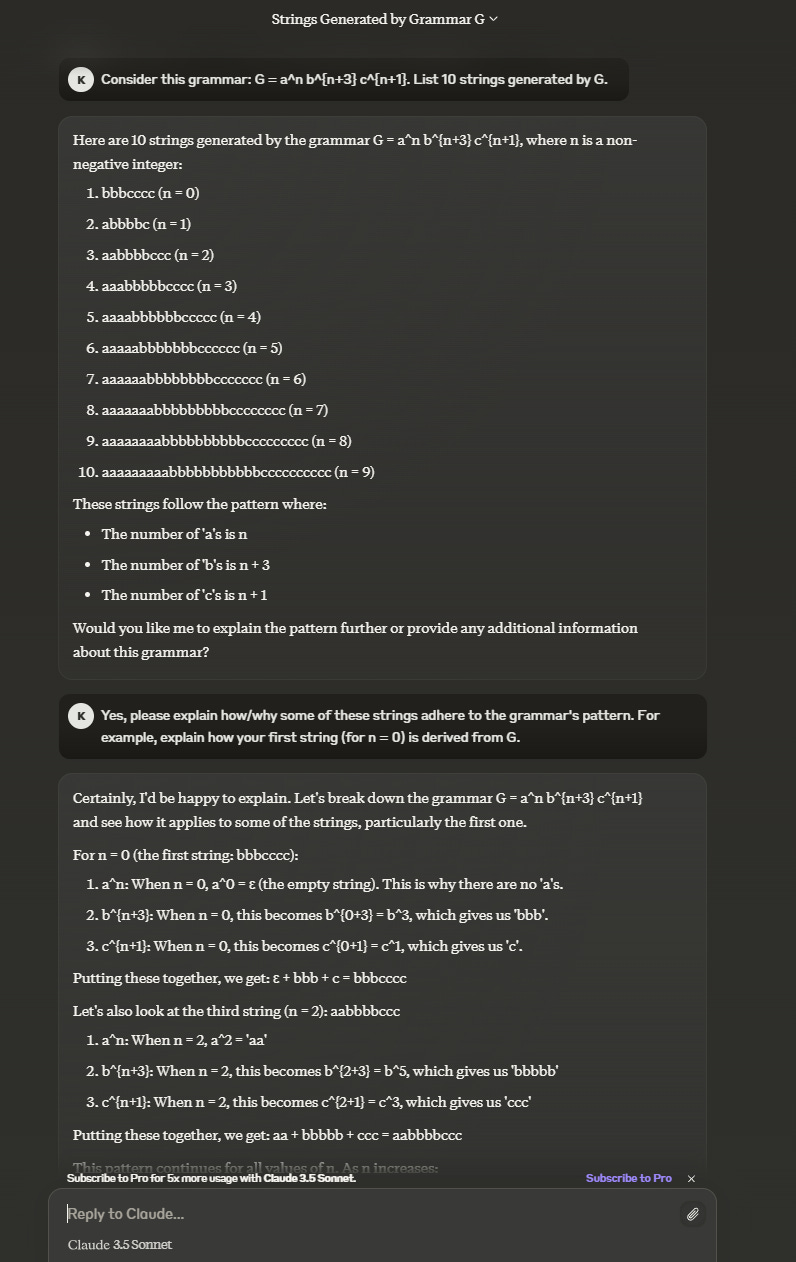

As much as I hate to rain on Anthropic’s parade, a few quick tests paint a very different picture:

This is “graduate-level reasoning”?

It would be easy to go on with additional examples, but the point is clear enough. Standard benchmarks are broken and essentially useless in assessing model quality. The hype built around them is pure marketing fluff.

Claude 3.5 Sonnet is also faster and cheaper, which is great, but my focus here is ability, not cost or speed.